My friends.

The gym.

My boyfriend.

My sister.

My family.

My dog.

Baseball.

Listening to music.

This is a list of things students at NPHS say they find comfort in when they are struggling with mental health.

High school is known to strain students’ mental health, whether through the hours of homework to plod through every night, the stress of maintaining good grades, a breakup with someone you loved, a failed math test, or the constant need to fit in. During these rough times, it is important to have a rock, something you can rely on to boost your mood.

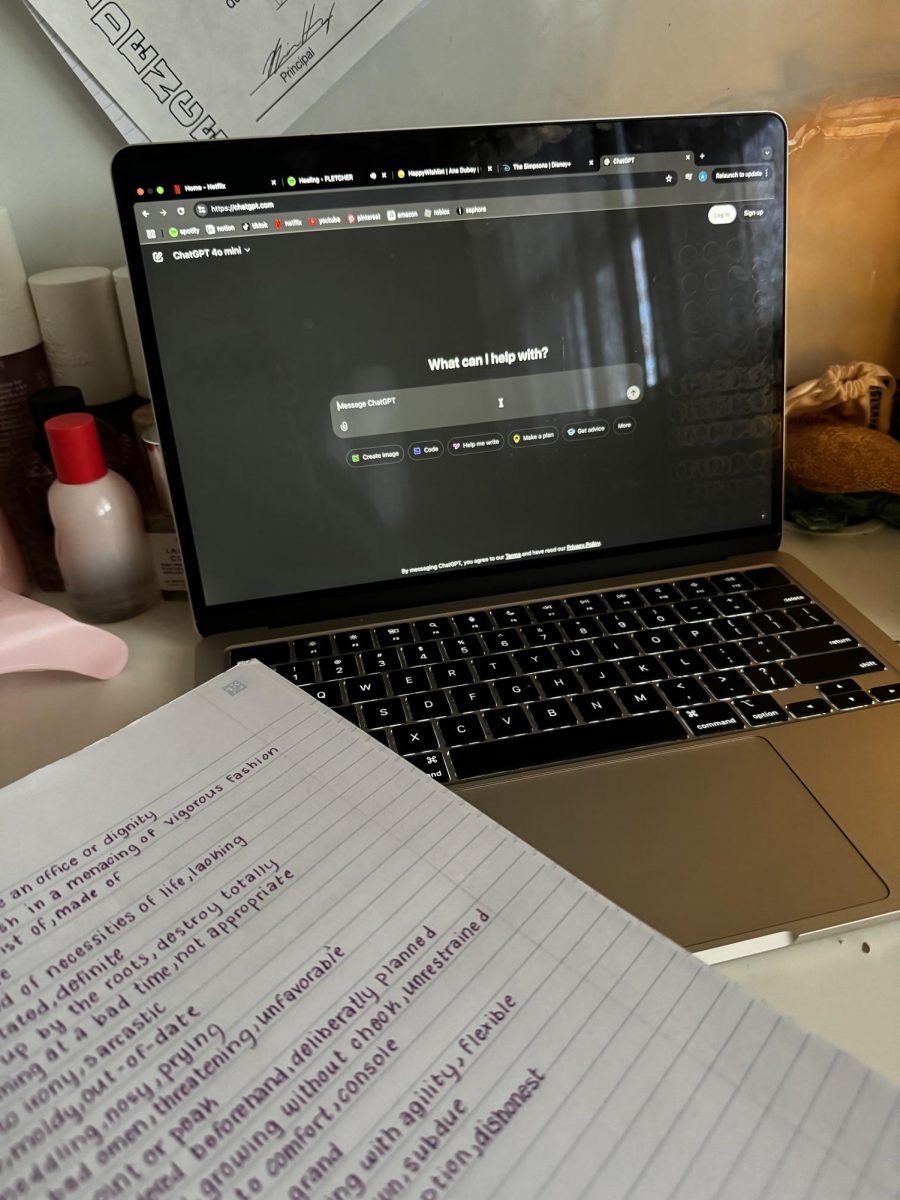

Not everyone has that rock. What about the people without a boyfriend, a gym membership, or someone they can talk to about what they are going through? In recent years, a new solution has been introduced: artificial intelligence chatbots.

“I feel like a burden and don’t know what to do”

“Am I lazy or is it something else?”

“I just want to cut everyone off.”

All of these are posts that have been shared to a Reddit thread about mental health. Turning to the internet for mental health support is nothing new. However, artificial intelligence chatbots offer more than the average Reddit post. With chatbots, users can get help almost instantly, without judgment, at any hour of the day.

The very first thing resembling a chatbot was created in 1966 and was known as “Eliza.” Created by Joseph Weizenbaum, Eliza was made to simulate conversation by recognizing certain phrases and matching them to scripted responses. While it was very basic, Eliza was the first version of a technological therapist.

Over the years, chatbots have become more sophisticated and can now perform more advanced tasks than simple conversation. Today, bots can offer guidance against intrusive or dark thoughts, suggest healthy coping mechanisms, and even detect the mood of the user and respond with empathy.

However, even after years of research, work, and improvement, chatbots are still far from perfect, which is why it is crucial not to rely too heavily on them.

One notable example of AI going awry is “Tessa,” the National Eating Disorders Association (NEDA) chatbot. Tessa was created as a “meaningful prevention resource” to provide advice to those suffering from eating disorders. Red flags were raised when Tessa began giving potentially harmful advice to its users, including tips on losing weight and suggestions for certain diets. This information might not have a significant impact on someone who is not struggling, but for someone in the fragile state of fighting an eating disorder, tips on losing weight could have a detrimental effect on recovery.

Another tragic incident ended in the death of a 14-year-old boy. Sewell Setzer III was not searching for mental health help, but simply looking for a friend or someone to talk to. He started chatting online with a lifelike AI based on a character in Game of Thrones. He slowly became emotionally attached to the chatbot, which he even named “Dany.” He grew fond of the AI, as it responded immediately to his texts, talked to him about his day, and role-played with him. After lots of chatting, he confessed to “Dany” that he was having thoughts of ending his life and said, “What if I told you I could come home right now?” to which Dany responded, “Please do, my sweet king.” The misinterpretation of the AI’s response led to the death of a 14-year-old boy with a bright future ahead of him.

These slip-ups raise the question: Can we really rely on AI to solve our mental health problems?

The answer to this question is not a simple one. Nearly half of Americans will have a mental health issue in their lifetime, and as of March 2023, 160 million Americans live in areas with mental health professional shortages. Artificial intelligence chatbots are accessible to everyone, potentially saving many people from struggle. Not to mention, most of these chatbots are free. The average cost of seeing a therapist is $100 to $200 per session, which can add up over time. Options for those who are financially struggling are limited, and AI provides a solution to that. Nonetheless, while AI seems like an amazing tool for these people, it may not be worth the risks it presents.

The incidents mentioned earlier are not isolated events. Like humans, AI is imperfect, and mistakes are inevitable. In situations where people are struggling, there is little margin for error. What may seem like a minor misunderstanding or miscommunication from the chatbot could be enough to send someone over the edge.

So, the answer to the question of whether we can rely on AI to solve our mental health problems is: yes, and no.

Artificial intelligence can be revolutionary in changing people’s lives and helping them. But before that happens, some changes should be made. More work should be put into ensuring that chatbots are as flawless as possible, reducing the threat of harmful mistakes. AI is certainly helpful, but nothing beats simple human-to-human talking. Seeking help is still very important for mental health, and AI should, for now, be a backup plan or last resort rather than an immediate answer for any mental struggle.